Saranya Vijayakumar

Ph.D. Candidate in Computer Science

Carnegie Mellon University

saranyav [at] andrew.cmu.edu

Research Vision

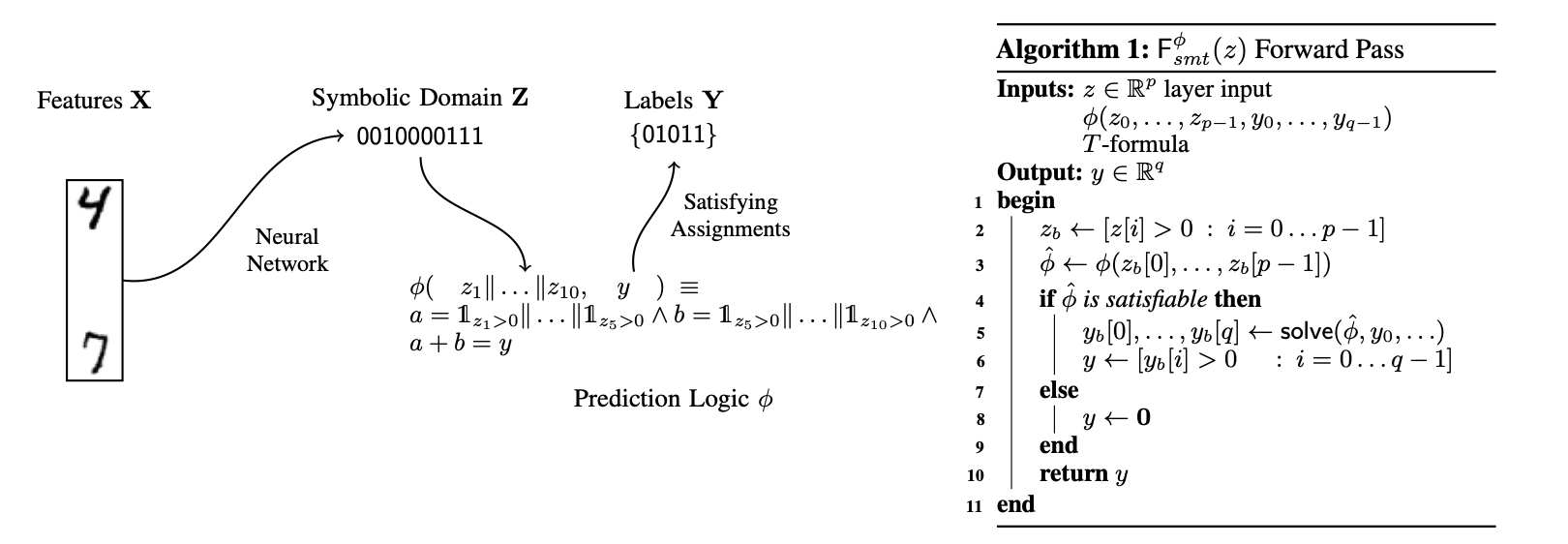

I work on making AI systems more secure, private, and understandable. My research combines formal verification and machine learning to address vulnerabilities in areas such as fraud detection, secure code generation, and privacy-preserving protocols. I’m currently focused on (1) identifying and exploiting weaknesses through red teaming and jailbreaks, and building tools that help us understand why these systems break and how to make them safer; and (2) understanding how security and alignment change under agentic conditions.

I am fortunate to be advised by Christos Faloutsos and Matt Fredrikson. Previously, I completed my undergraduate studies at Harvard with a joint concentration in computer science and government, working with Cynthia Dwork and Jim Waldo on my thesis. After Harvard, I spent three years as an associate at Goldman Sachs before beginning my PhD. During my PhD, I have worked on projects at Inria (with Steve Kremer and Charlie Jacomme) and IBM Research (with Karthikeyan Ramamurthy and Erik Miehling).

My AI governance experience includes running National Security Policy at Harvard's Institute of Politics, graduate coursework at the Kennedy School, an internship at Booz Allen Hamilton, and research collaboration with Bruce Schneier at the Berkman Klein Center. At CMU, I served as a teaching assistant for Norman Sadeh's Security, Privacy and Public Policy course and I guest lecture for his AI governance class. I am supported by the Department of Defense National Defense Science and Engineering Graduate Fellowship through the Army Research Office.

Core research areas:

Research

Prototype-Integrated Representation Learning for Novelty Detection

IEEE TrustCom 2025

Saranya Vijayakumar, Matt Fredrikson, Christos Faloutsos

AICodeDetect: A Pipeline for Systematic Detection and Analysis of AI-Generated Code

IEEE MCSI 2025

Saranya Vijayakumar, Philip Negrin, Christos Faloutsos

Mechanistically Interpreting a Transformer-based 2-SAT Solver

ICML 2025

Nils Palumbo, Ravi Mangal, Zifan Wang, Saranya Vijayakumar, Corina Pasareanu, Somesh Jha

Aligned LLMs Are Not Aligned Browser Agents

ICLR 2025

Priyanshu Kumar, Saranya Vijayakumar, Elaine Lau, Tu Trinh, Zifan Wang, Matt Fredrikson

Leveraging Large Language Models for Enhanced Membership Inference

Under Review, PoPETS 2026

Saranya Vijayakumar, Matt Fredrikson, Norman Sadeh

Talks

LLM Security Vulnerabilities

AI Governance Course (17-416/17-716), March 31, 2025

- Applied security analysis techniques

- Real-world privacy challenges with LLMs

- Jailbreaking and watermarking

Security and Privacy in Practice

Information Security, Privacy & Policy (17-331/631), November 21, 2024

Slides

- Applied security analysis techniques

- Real-world privacy challenges with LLMs

- Jailbreaking and watermarking

AI Security and Governance

AI Governance Course (17-416/17-716), April 3, 2024

- Current landscape of AI security challenges

- Intersection of technical capabilities and governance frameworks

- Emerging threats and mitigation strategies

AI Security, Robustness, and Privacy

Information Security, Privacy & Policy (17-331/631), December 5, 2023

- Overview of current challenges in AI security

- Discussion of robustness techniques and evaluation

- Privacy considerations in modern AI systems

Academic Service

Information Security, Privacy & Policy (17-331/631), Fall 2024

Final Project Judge

- Evaluated student projects on security and privacy implementations

- Provided technical feedback and industry-relevant insights

- Helped assess practical applicability of security solutions

Teaching

Teaching Philosophy

Teaching Statement (PDF)

I believe in creating an inclusive learning environment that emphasizes practical understanding and critical thinking. My teaching approach combines theoretical foundations with hands-on experience, preparing students for both academic and industry challenges.

Teaching Experience

Information Security, Privacy & Policy (17-331/631)

Teaching Assistant, Fall 2023

Instructors: Norman Sadeh and Hana Habib

Course Highlights:

- Master's-level course covering security and privacy fundamentals

- Led discussion sections on privacy policies and security frameworks

- Mentored student projects in privacy and membership inference analysis

Rapid Prototyping Technologies (15-294) & Intermediate Rapid Prototyping (15-394)

Teaching Assistant, Spring 2023

Instructor: Dave Touretzky

Course Highlights:

- Taught both introductory and intermediate prototyping techniques

- Supervised hands-on laboratory sessions

- Provided technical guidance for student projects

Teaching Development

Eberly Center Future Faculty Program

Participant in Carnegie Mellon's teaching development program

- Completed intensive pedagogical training

- Developed evidence-based teaching strategies

- Created inclusive course design frameworks

Mentorship

- High School Students: Mentored Philip Negrin on AI Code Detection research (project video) and a second student on their research project.

- Master's Students at CMU: Guided a team of four students on privacy research analyzing Google's Topics API (USENIX PEPR '24).

Research Projects & Impact

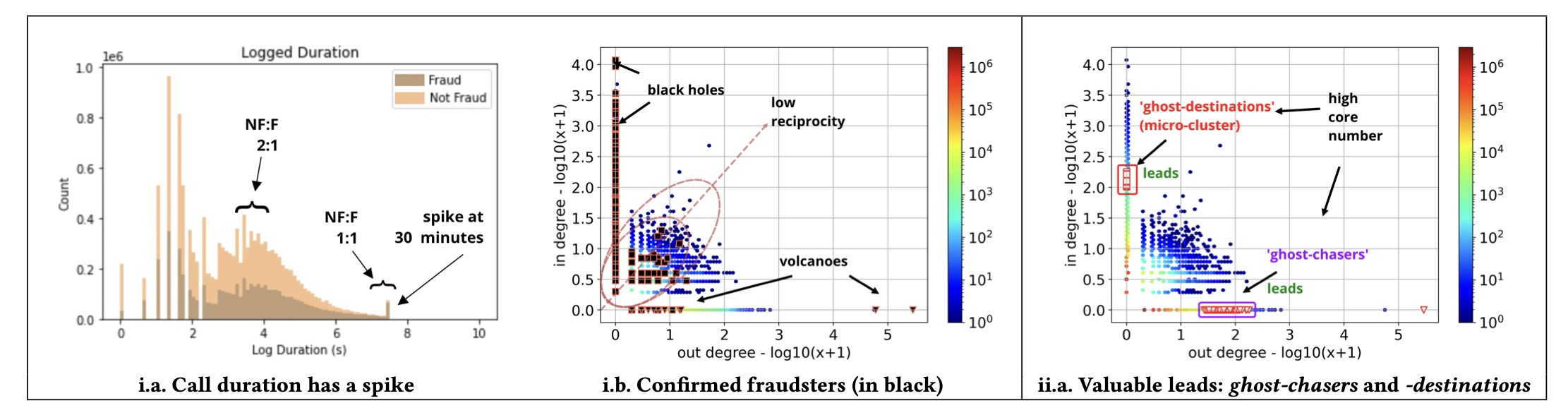

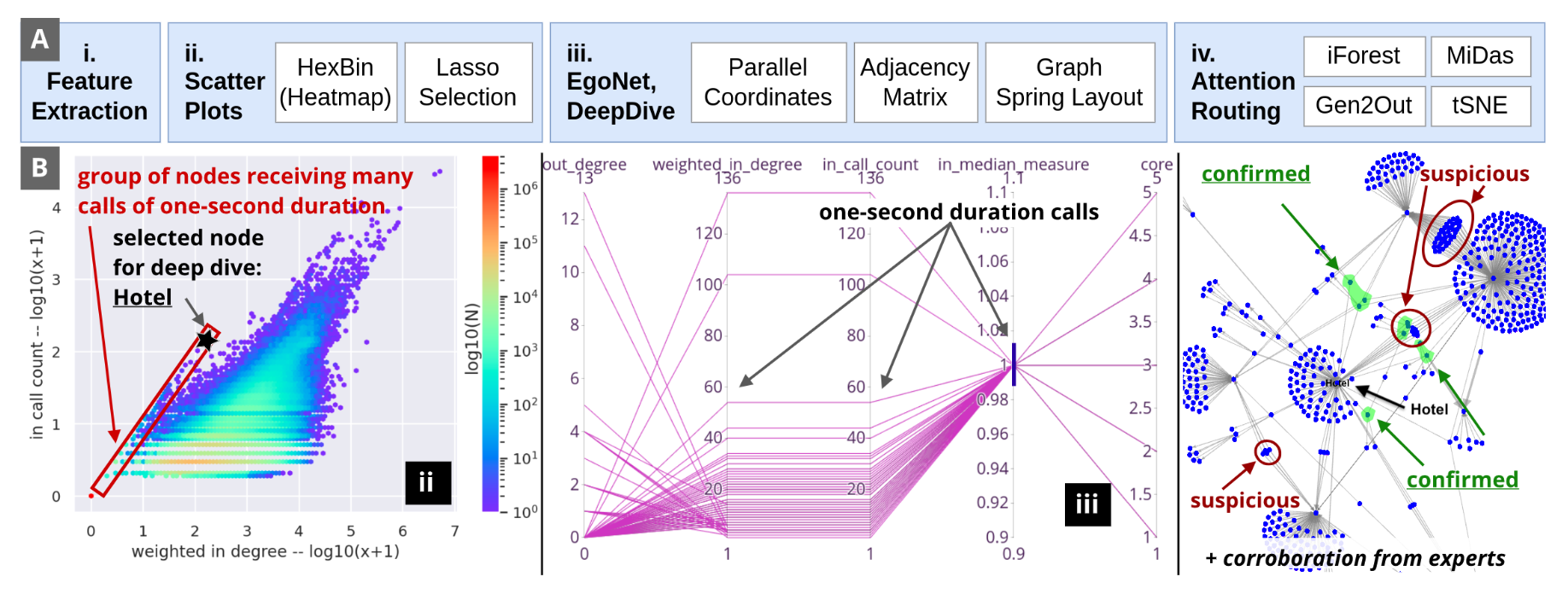

Large-Scale Fraud Detection Systems

TgrApp system visualization interface

Publications & Demonstrations

Developed novel visualization and detection methods for analyzing million-scale fraud patterns in telecommunication networks, leading to deployed solutions with real-world impact.

Agentic AI Security

Investigating security vulnerabilities that emerge when LLMs are deployed as autonomous agents, including browser agents and other agentic systems that interact with real-world environments.

Browser Agent Alignment Failures

Aligned LLMs Are Not Aligned Browser Agents (ICLR 2025)

Demonstrated that refusal-trained LLMs can be easily jailbroken when deployed as browser agents, revealing fundamental gaps in current alignment techniques for agentic systems.

Workshop Contributions

Refusal-Trained LLMs Are Easily Jailbroken as Browser Agents

Priyanshu Kumar, Elaine Lau, Saranya Vijayakumar, Tu (Alina) Trinh, Scale Red Team, Elaine Chang, Vaughn Robinson, Sean Hendryx, Shuyan Zhou, Matt Fredrikson, Summer Yue, Zifan (Sail) Wang

Workshop Paper

Current Research Focus

Exploring ways to improve the interpretability of agentic AI systems.

Formal Methods in Security

Research Visits & Collaborations

Inria Nancy

Formal verification of the Olvid messaging protocol using ProVerif

Constraint Programming

National Security Applications

- CSET Review: "Cybersecurity Risks of AI-Generated Code" for Georgetown University

- International encryption research, featured in NBC News, Forbes, and The Intercept

- Upcoming work on AI-generated code detection (Under Review at PAKDD 2025)

Algorithmic Fairness & Ethics

Public Impact & Research

Early work on fairness in algorithmic decision-making systems, combining technical analysis with policy implications.

Featured Article in Harvard Political Review

Undergraduate Thesis on Fairness Metrics in ML

Privacy-Preserving Technologies

Topics API Privacy Analysis

Investigating privacy vulnerabilities in Google's Topics API through novel LLM-based approaches

- Enhanced membership inference techniques

- Novel reidentification methods

- Privacy implications for web advertising

Under Review, 2025

Adversarial Privacy Attacks

PEPR 2024 Publication

Novel techniques for evaluating and enhancing privacy protections in modern web APIs

Service & Leadership

Academic Community Building

Outreach & Impact

Implemented healthcare technology solutions with Partners in Health, Lima, Peru

Led computer science education programs in Boston public schools

Awards & Recognition

Graduate Fellowship for STEM Diversity (GFSD)

NSA (Declined)

National Defense Science & Engineering Graduate Fellowship

Army Research Office

Future Faculty Program

Carnegie Mellon University Eberly Center

Research & Industry Experience

Research Scientist, Quantitative Trading

2018 - 2021

Goldman Sachs - New York, NY

Research Contributions

- Developed novel statistical methods for analyzing market microstructure, processing 100M+ daily trading records to identify systematic patterns in algorithmic execution

- Led research initiatives on latency-sensitive distributed systems, resulting in peer-reviewed internal publications on network optimization

- Architected real-time analytics pipelines using Python and KDB/Q, implementing novel algorithms for trade execution optimization

Systems & Infrastructure

- Designed distributed computing framework for processing market data streams across multiple data centers

- Implemented machine learning models for real-time trade classification and risk monitoring

- Built visualization tools for analyzing high-dimensional financial data, later adopted across multiple trading desks

Data Scientist

2022

Beto O'Rourke Senate Campaign

Technical Leadership

- Developed machine learning models for voter behavior prediction using demographic data

- Helped organize the grassroots campaign's effort to set up new headquarters with the Distribution Team

Research Impact

- Identified key counties where outreach was most likely to have an impact

- Implemented statistical methods for evaluating voter outreach effectiveness